4 DeepSeek Secrets You Never Knew

Author information

- Written by Chauncey Roseby

- Date posted

Body

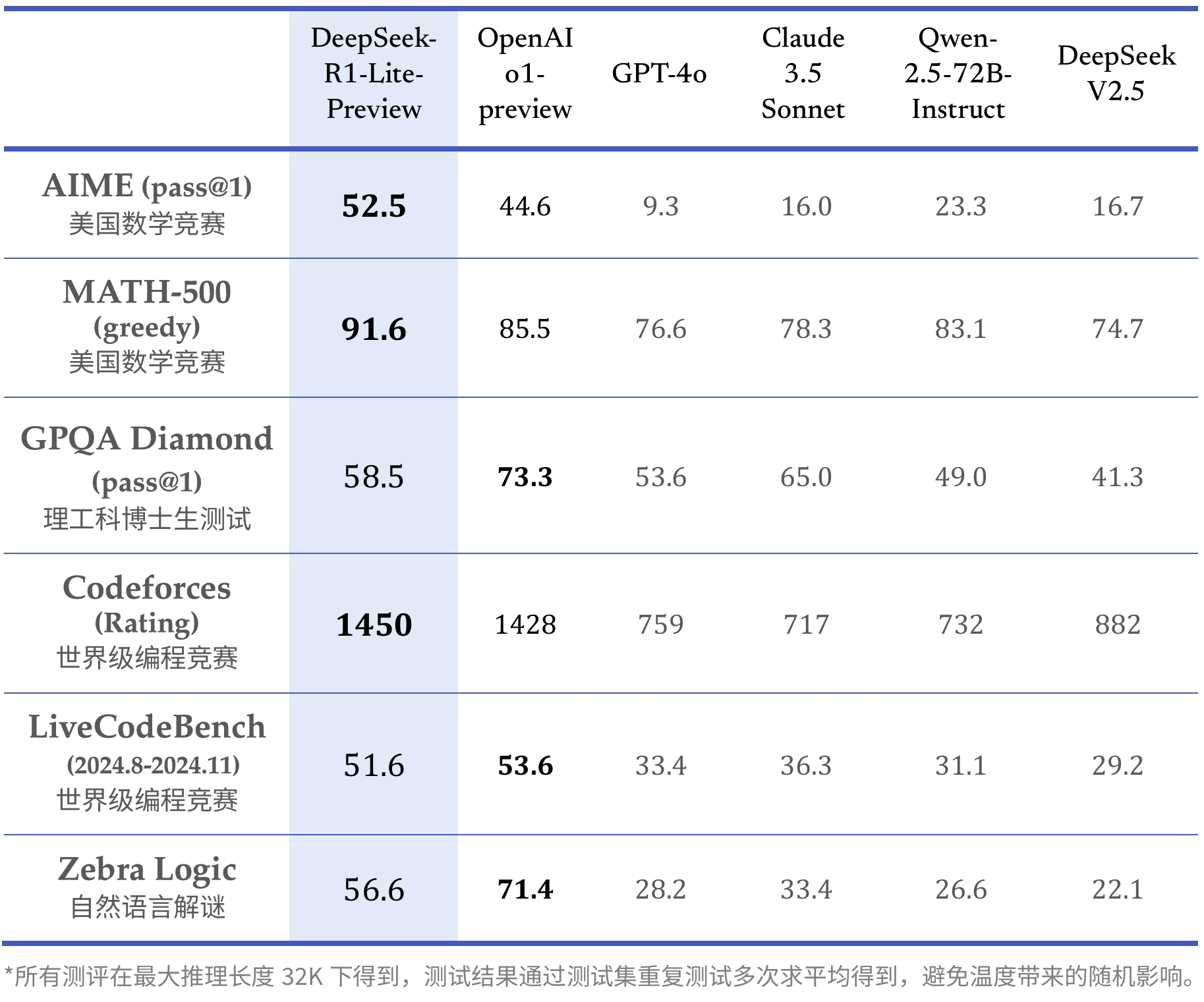

So, what is DeepSeek, and what might it mean for the U.S.? "It’s about the world realizing that China has caught up - and in some areas overtaken - the U.S." All of which has raised a critical question: despite American sanctions on Beijing’s ability to access advanced semiconductors, is China catching up with the U.S.? Entrepreneur and commentator Arnaud Bertrand captured this dynamic, contrasting China’s frugal, decentralized innovation with that of the U.S. While DeepSeek’s innovation is groundbreaking, it has by no means established a commanding market lead.

Because the model is open source, developers can customize it, fine-tune it for specific tasks, and contribute to its ongoing development. On coding-related tasks, DeepSeek-V3 emerges as the top-performing model on coding competition benchmarks such as LiveCodeBench, solidifying its position as the leading model in this domain. Reinforcement learning allows the model to learn on its own through trial and error, much like how you learn to ride a bike or perform certain tasks.

Some American AI researchers have cast doubt on DeepSeek’s claims about how much it spent and how many advanced chips it deployed to create its model. A new Chinese AI model, created by the Hangzhou-based startup DeepSeek, has stunned the American AI industry by outperforming some of OpenAI’s leading models, displacing ChatGPT at the top of the iOS App Store, and usurping Meta as the leading purveyor of so-called open-source AI tools.


Meta and Mistral, the French open-source model company, may be a beat behind, but it will probably be only a few months before they catch up. To further push the boundaries of open-source model capabilities, we scale up our models and introduce DeepSeek-V3, a large Mixture-of-Experts (MoE) model with 671B parameters, of which 37B are activated for each token. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT4-Turbo. In recent years, Large Language Models (LLMs) have been undergoing rapid iteration and evolution (OpenAI, 2024a; Anthropic, 2024; Google, 2024), progressively diminishing the gap towards Artificial General Intelligence (AGI). A spate of open-source releases in late 2024 put the startup on the map, including the large language model "v3", which outperformed all of Meta's open-source LLMs and rivaled OpenAI's closed-source GPT4-o. During the post-training stage, we distill the reasoning capability from the DeepSeek-R1 series of models, while carefully maintaining the balance between model accuracy and generation length. DeepSeek-R1 represents a significant leap forward in AI reasoning model performance, but this power comes with a demand for substantial hardware resources. Despite its economical training costs, comprehensive evaluations reveal that DeepSeek-V3-Base has emerged as the strongest open-source base model currently available, especially in code and math.
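To make the "671B parameters, of which 37B are activated for each token" idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration only, not DeepSeek-V3's actual gating function: the softmax scoring, the function names, and the toy setup of 8 experts with 2 active per token are all assumptions chosen for readability.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return (expert_index, gate_weight) pairs for the k best-scoring experts.

    Gate weights are renormalized so that they sum to 1 over the chosen experts.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

# Hypothetical toy setup: 8 experts, only 2 of them run for this token.
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]
selected = route_top_k(logits, k=2)
print(selected)

# Share of parameters active per token, using the figures quoted in the text.
active_fraction = 37 / 671
print(f"{active_fraction:.1%} of parameters are active per token")
```

Only the selected experts do any work for a given token, which is why the per-token compute tracks the 37B activated parameters, roughly 5.5% of the 671B total.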

In order to achieve efficient training, we support FP8 mixed precision training and implement comprehensive optimizations for the training framework. We evaluate DeepSeek-V3 on a comprehensive array of benchmarks. We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, notably DeepSeek-V3. To address these issues, we developed DeepSeek-R1, which incorporates cold-start data before RL, achieving reasoning performance on par with OpenAI-o1 across math, code, and reasoning tasks. Generating synthetic data is more resource-efficient than traditional training methods. With techniques like prompt caching and speculative API, we ensure high-throughput performance with a low total cost of ownership (TCO), in addition to bringing the best of the open-source LLMs on the same day of the launch. The results show that DeepSeek-Coder-Base-33B significantly outperforms existing open-source code LLMs. DeepSeek-R1-Lite-Preview shows steady score improvements on AIME as thought length increases.

Next, we conduct a two-stage context length extension for DeepSeek-V3. In the first stage, the maximum context length is extended to 32K, and in the second stage, it is further extended to 128K. Following this, we conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. Combined with 119K GPU hours for the context length extension and 5K GPU hours for post-training, DeepSeek-V3 costs only 2.788M GPU hours for its full training.
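The 2.788M total can be checked with simple arithmetic. The short Python sketch below adds the 119K and 5K GPU hours quoted here to the 2.664M H800 GPU hours of pre-training cited further down in this article; the variable names are illustrative only.

```python
# GPU-hour figures as quoted in the article (H800 GPU hours).
pre_training_hours = 2_664_000       # pre-training on 14.8T tokens
context_extension_hours = 119_000    # two-stage context length extension
post_training_hours = 5_000          # SFT and RL post-training

total_hours = pre_training_hours + context_extension_hours + post_training_hours
print(f"{total_hours / 1e6:.3f}M GPU hours")  # 2.788M GPU hours

# Pre-training dominates the budget; the other two stages are small by comparison.
pre_training_share = pre_training_hours / total_hours
print(f"pre-training share: {pre_training_share:.1%}")
```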


Firstly, DeepSeek-V3 pioneers an auxiliary-loss-free strategy (Wang et al., 2024a) for load balancing, with the aim of minimizing the adverse impact on model performance that arises from the effort to encourage load balancing. The technical report notes that this achieves better performance than relying on an auxiliary loss while still ensuring appropriate load balance.

- On top of the efficient architecture of DeepSeek-V2, we pioneer an auxiliary-loss-free strategy for load balancing, which minimizes the performance degradation that arises from encouraging load balancing.
- At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model.
- Through the co-design of algorithms, frameworks, and hardware, we overcome the communication bottleneck in cross-node MoE training, achieving near-full computation-communication overlap.

As for the training framework, we design the DualPipe algorithm for efficient pipeline parallelism, which has fewer pipeline bubbles and hides most of the communication during training through computation-communication overlap.
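The auxiliary-loss-free load balancing mentioned above can be sketched roughly as follows. The idea, as the technical report describes it, is to add a per-expert bias to the routing scores and nudge that bias down for overloaded experts and up for underloaded ones, instead of adding a balancing term to the loss. Everything else in this toy Python simulation is an assumption for illustration: the function names, the random affinities, the deliberate skew towards expert 0, and the step size `gamma`.

```python
import random

def select_experts(affinities, biases, k):
    """Pick the top-k experts by affinity + bias (the bias affects selection only)."""
    adjusted = [a + b for a, b in zip(affinities, biases)]
    ranked = sorted(range(len(adjusted)), key=lambda i: adjusted[i], reverse=True)
    return ranked[:k]

def update_biases(biases, loads, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    updated = []
    for bias, load in zip(biases, loads):
        if load > mean_load:
            updated.append(bias - gamma)
        elif load < mean_load:
            updated.append(bias + gamma)
        else:
            updated.append(bias)
    return updated

def simulate(num_experts=4, tokens_per_step=256, steps=50, k=1, gamma=0.02, seed=0):
    """Route random tokens for several steps and record per-expert load per step."""
    rng = random.Random(seed)
    biases = [0.0] * num_experts
    history = []
    for _ in range(steps):
        loads = [0] * num_experts
        for _ in range(tokens_per_step):
            affinities = [rng.random() for _ in range(num_experts)]
            affinities[0] += 0.5  # hypothetical skew: expert 0 starts out over-favored
            for expert in select_experts(affinities, biases, k):
                loads[expert] += 1
        history.append(loads)
        biases = update_biases(biases, loads, gamma)
    return history, biases

history, biases = simulate()
print("first step loads:", history[0])
print("last step loads: ", history[-1])
print("final biases:    ", [round(b, 2) for b in biases])
```

In this toy run, expert 0 receives most tokens at first, and the bias updates gradually spread the load across experts without any change to a training loss. A real implementation would compute gate weights from the unbiased affinities and operate on batched tensors rather than Python lists.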
