Outrageous Deepseek Tips

작성자 정보

- Kyle Wolinski 작성

- 작성일

본문

In reality, what DeepSeek means for literature, the performing arts, visual tradition, and so on., can appear utterly irrelevant in the face of what might seem like a lot increased-order anxieties relating to national safety, economic devaluation of the U.S. In several instances we determine recognized Chinese companies resembling ByteDance, Inc. which have servers positioned in the United States however might switch, DeepSeek course of or access the info from China. The company was founded by Liang Wenfeng, a graduate of Zhejiang University, in May 2023. Wenfeng also co-founded High-Flyer, a China-based mostly quantitative hedge fund that owns DeepSeek. Liang was a disruptor, not just for the remainder of the world, but in addition for China. Therefore, beyond the inevitable matters of cash, talent, and computational power concerned in LLMs, we additionally discussed with High-Flyer founder Liang about what sort of organizational construction can foster innovation and the way long human madness can last. For rewards, as a substitute of using a reward mannequin skilled on human preferences, they employed two varieties of rewards: an accuracy reward and a format reward. In this stage, they again used rule-based strategies for accuracy rewards for math and coding questions, whereas human desire labels used for different query sorts.

As outlined earlier, DeepSeek developed three varieties of R1 models. Pre-trained on 18 trillion tokens, the brand new fashions ship an 18% efficiency boost over their predecessors, handling up to 128,000 tokens-the equivalent of around 100,000 Chinese characters-and producing as much as 8,000 phrases. When the scarcity of high-efficiency GPU chips amongst home cloud providers turned the most direct factor limiting the start of China's generative AI, in response to "Caijing Eleven People (a Chinese media outlet)," there are not more than 5 companies in China with over 10,000 GPUs. This enables its know-how to keep away from probably the most stringent provisions of China's AI laws, similar to requiring shopper-going through technology to adjust to authorities controls on data. I believe that OpenAI’s o1 and o3 models use inference-time scaling, which would explain why they're relatively costly compared to fashions like GPT-4o. Along with inference-time scaling, o1 and o3 were probably educated using RL pipelines just like these used for DeepSeek R1. Another approach to inference-time scaling is the use of voting and search methods.

As outlined earlier, DeepSeek developed three varieties of R1 models. Pre-trained on 18 trillion tokens, the brand new fashions ship an 18% efficiency boost over their predecessors, handling up to 128,000 tokens-the equivalent of around 100,000 Chinese characters-and producing as much as 8,000 phrases. When the scarcity of high-efficiency GPU chips amongst home cloud providers turned the most direct factor limiting the start of China's generative AI, in response to "Caijing Eleven People (a Chinese media outlet)," there are not more than 5 companies in China with over 10,000 GPUs. This enables its know-how to keep away from probably the most stringent provisions of China's AI laws, similar to requiring shopper-going through technology to adjust to authorities controls on data. I believe that OpenAI’s o1 and o3 models use inference-time scaling, which would explain why they're relatively costly compared to fashions like GPT-4o. Along with inference-time scaling, o1 and o3 were probably educated using RL pipelines just like these used for DeepSeek R1. Another approach to inference-time scaling is the use of voting and search methods.

The accessibility of such superior models could result in new purposes and use cases across numerous industries. Using the SFT knowledge generated in the previous steps, the DeepSeek crew high-quality-tuned Qwen and Llama fashions to enhance their reasoning abilities. The RL stage was followed by another round of SFT information collection. Note that it is actually common to include an SFT stage earlier than RL, as seen in the usual RLHF pipeline. This confirms that it is possible to develop a reasoning model utilizing pure RL, and the DeepSeek crew was the first to show (or at the very least publish) this approach. The primary, DeepSeek-R1-Zero, was constructed on high of the DeepSeek-V3 base mannequin, a standard pre-skilled LLM they released in December 2024. Unlike typical RL pipelines, where supervised fantastic-tuning (SFT) is applied earlier than RL, DeepSeek-R1-Zero was trained completely with reinforcement learning with out an initial SFT stage as highlighted within the diagram below.

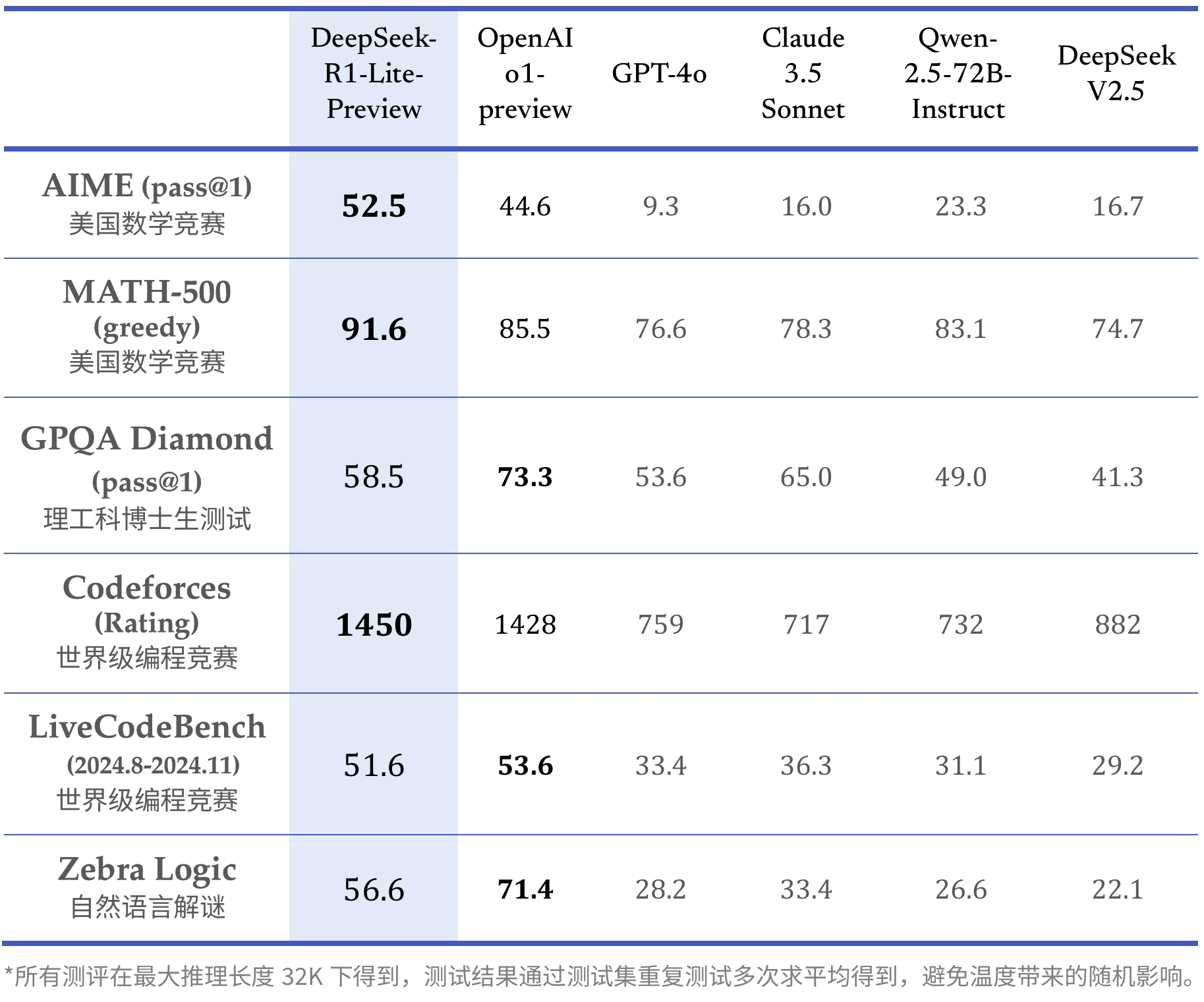

DeepSeek AI stands out with its high-efficiency models that persistently obtain top rankings on main AI benchmarks. Next, let’s look at the development of Deepseek free-R1, DeepSeek’s flagship reasoning mannequin, which serves as a blueprint for constructing reasoning fashions. 2) DeepSeek online-R1: This is DeepSeek’s flagship reasoning mannequin, constructed upon DeepSeek-R1-Zero. During training, DeepSeek-R1-Zero naturally emerged with numerous highly effective and fascinating reasoning behaviors. This mannequin improves upon DeepSeek-R1-Zero by incorporating further supervised fine-tuning (SFT) and reinforcement studying (RL) to improve its reasoning efficiency. DeepSeek is a large language model AI product that gives a service similar to merchandise like ChatGPT. But breakthroughs usually start with elementary research that has no foreseeable product or revenue in thoughts. Having these giant models is sweet, however only a few basic points could be solved with this. While not distillation in the traditional sense, this process concerned coaching smaller models (Llama 8B and 70B, and Qwen 1.5B-30B) on outputs from the bigger DeepSeek-R1 671B mannequin. Still, this RL process is just like the generally used RLHF approach, which is often utilized to choice-tune LLMs. This RL stage retained the same accuracy and format rewards utilized in DeepSeek-R1-Zero’s RL course of.

If you have any questions with regards to where by as well as the way to work with Deepseek AI Online chat, you'll be able to e-mail us on our website.